What is Kubernetes?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF).

With Kubernetes, you can deploy containerized applications across multiple nodes, and manage and scale them more easily. Kubernetes provides a range of features such as load balancing, auto-scaling, self-healing, and rolling updates, which allow you to manage and maintain containerized applications with minimal downtime and manual intervention.
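Auto-scaling is a concrete example. The Horizontal Pod Autoscaler computes its target replica count as `ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. It skips the change when that ratio is within a tolerance of 1.0, which is 0.1 by default. The Python sketch below implements only that formula. The function name and the sample CPU figures are illustrative assumptions. The real autoscaler also clamps the result to the configured minimum and maximum replicas and handles missing metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Target replica count per the HPA formula (no min/max clamping)."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count unchanged
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Four pods averaging 90% CPU against a 60% target -> scale out
print(desired_replicas(4, 90.0, 60.0))  # 6
# Four pods averaging 30% CPU against a 60% target -> scale in
print(desired_replicas(4, 30.0, 60.0))  # 2
```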

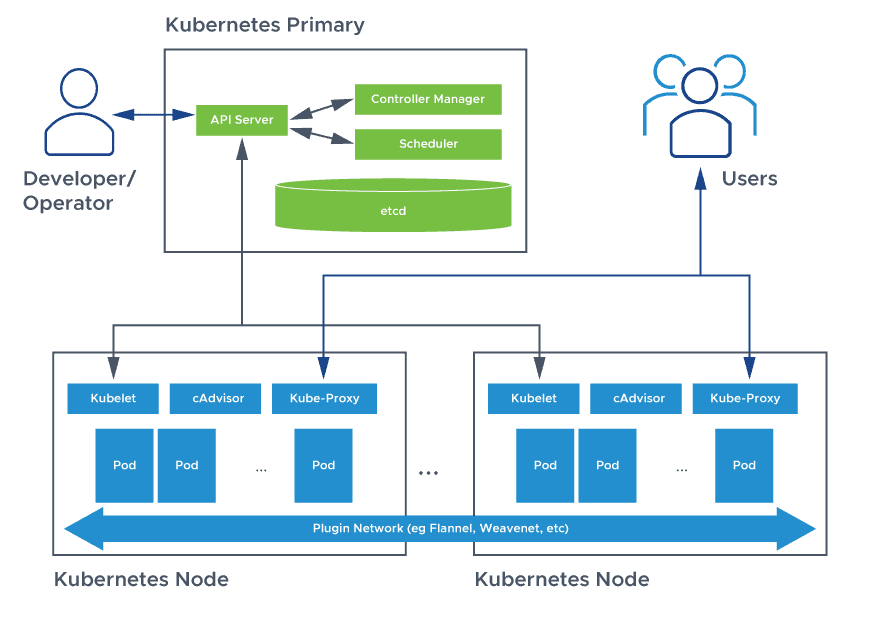

Kubernetes - Cluster Architecture

The architecture of Kubernetes is divided into several components that work together to provide a scalable and resilient platform for managing containerized applications. Here are the main components:

Master node/Control Plane:

The master node runs the Kubernetes control plane, which manages the overall state of the cluster. The control plane includes several components:

etcd: A distributed key-value store that stores the configuration and state of the cluster.

API server: The main endpoint for managing the cluster, which provides a RESTful API that clients can use to interact with Kubernetes.

Controller manager: A collection of controllers that are responsible for maintaining the desired state of the cluster, such as ensuring that the correct number of pod replicas is running for each application.

Scheduler: A component that schedules pods to run on worker nodes based on their resource requirements and availability.
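The controller manager maintains the desired state through a reconciliation loop. Each controller compares the desired state with the observed state and acts on the difference. Below is a minimal Python sketch of that idea for replica counts. `ReplicaController` and the `pod-N` naming are hypothetical. Real controllers also differ in where they get their state: they watch the API server instead of keeping pods in a local list.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ReplicaController:
    """Hypothetical toy controller that keeps `desired` pods running."""
    desired: int
    running: list = field(default_factory=list)
    _ids: count = field(default_factory=lambda: count(1), init=False, repr=False)

    def reconcile(self) -> list:
        """Run one pass of the control loop and return the actions taken."""
        actions = []
        # Observed < desired: create the missing pods
        while len(self.running) < self.desired:
            name = f"pod-{next(self._ids)}"
            self.running.append(name)
            actions.append(f"create {name}")
        # Observed > desired: delete the surplus pods
        while len(self.running) > self.desired:
            actions.append(f"delete {self.running.pop()}")
        return actions


rc = ReplicaController(desired=3)
print(rc.reconcile())       # ['create pod-1', 'create pod-2', 'create pod-3']
rc.running.remove("pod-2")  # simulate a pod failing
print(rc.reconcile())       # ['create pod-4']
rc.desired = 2              # user scales down
print(rc.reconcile())       # ['delete pod-4']
```

Because the loop only looks at the difference, running it again when nothing has changed takes no action. That property lets real controllers re-run safely at any time.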

Worker node:

The worker nodes are the compute nodes in the cluster where the containers run. Each worker node includes several components:

Kubelet: An agent that runs on each worker node and is responsible for managing the containers on that node.

Container runtime: The software that runs the containers, such as Docker or containerd.

kube-proxy: A network proxy that runs on each node and forwards traffic sent to a Service to one of the pods behind it.

Pods:

A pod is the smallest deployable unit in Kubernetes and represents a single instance of a running process in the cluster. A pod can contain one or more containers, which share the same network namespace and can communicate with each other through localhost.

Services:

A service is an abstraction that represents a set of pods and provides a stable IP address and DNS name for clients to access them. Services can also provide load balancing and automatic failover.
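A Service finds its pods through a label selector: every key/value pair in the selector must be present in a pod's labels. The Python sketch below shows equality-based matching. The pod names, labels, and IP addresses are made up. The sketch also ignores pod readiness, which Kubernetes checks before sending traffic to a pod.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every selector key must exist with the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict, pods: list) -> list:
    """Return the IPs of the pods a Service with this selector would target."""
    return [pod["ip"] for pod in pods if matches(selector, pod["labels"])]


pods = [
    {"name": "web-1", "ip": "10.244.1.5", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "ip": "10.244.2.7", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "ip": "10.244.1.9", "labels": {"app": "db"}},
]
print(endpoints({"app": "web"}, pods))  # ['10.244.1.5', '10.244.2.7']
```

In this sketch an empty selector matches every pod. In Kubernetes, a Service defined without a selector gets no automatically managed endpoints.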

Volume:

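A volume is a directory that is mounted into the containers of a pod. Unlike a container's own filesystem, its data survives container restarts. Some volume types are also backed by persistent storage that outlives the pod itself.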

Namespace:

A namespace provides a way to partition the resources in a cluster and is used to separate different applications or environments.
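One practical effect of this partitioning is that object names only need to be unique within a namespace. A `dev` and a `prod` namespace can therefore each contain a Deployment called `web`. Some resources, such as Nodes and PersistentVolumes, are cluster-scoped and not namespaced at all. The toy Python store below models the namespace rule by keying objects on (kind, namespace, name). The class and its methods are hypothetical, not a Kubernetes API.

```python
class NamespacedStore:
    """Hypothetical store where names are unique per (kind, namespace), not cluster-wide."""

    def __init__(self) -> None:
        self._objects = {}

    def create(self, kind: str, namespace: str, name: str, spec: dict) -> None:
        key = (kind, namespace, name)
        if key in self._objects:
            raise ValueError(f'{kind} "{name}" already exists in namespace "{namespace}"')
        self._objects[key] = spec

    def names(self, kind: str, namespace: str) -> list:
        """List object names of one kind in one namespace, like a namespaced 'get'."""
        return sorted(n for (k, ns, n) in self._objects if k == kind and ns == namespace)


store = NamespacedStore()
store.create("Deployment", "dev", "web", {"replicas": 1})
store.create("Deployment", "prod", "web", {"replicas": 5})  # same name, other namespace: allowed
print(store.names("Deployment", "prod"))  # ['web']
try:
    store.create("Deployment", "dev", "web", {"replicas": 2})
except ValueError as err:
    print(err)  # Deployment "web" already exists in namespace "dev"
```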

Working of Kubernetes

The control plane is a collection of components that manage the overall health of the cluster, for example when you want to create, destroy, or scale pods. Four components run on the control plane:

Kube-API server : The API server is the component of the Kubernetes control plane that exposes the Kubernetes API. It acts as the entry point to the cluster, receiving updates and queries from clients such as the kubectl CLI. kubectl communicates with the API server to tell it what needs to be done, such as creating or deleting pods. The API server also works as a gatekeeper: it validates the requests it receives and then hands the work on to the other components. No request reaches the cluster directly; every request has to go through the API server.

Kube-Scheduler : When a new pod is created through the API server and has not yet been assigned to a node, the scheduler picks it up. It decides which node the pod should run on, weighing factors such as the pod's resource requests and each node's available capacity, so that the cluster's resources are used efficiently.

Kube-Controller-Manager : The kube-controller-manager is responsible for running the controllers that handle the various aspects of the cluster’s control loop. These controllers include the replication controller, which ensures that the desired number of replicas of a given application is running, and the node controller, which ensures that nodes are correctly marked as “ready” or “not ready” based on their current state.

etcd : etcd is the key-value store of the cluster. Every change to the cluster state is stored in etcd, which makes it the cluster's brain. The scheduler and other components learn from this data which resources are available and how the cluster state has changed. They read it through the API server, which is the only component that talks to etcd directly.
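kube-scheduler makes its decision in two broad stages. It first filters out nodes that cannot run the pod, then scores the remaining ones and picks the highest score. The Python sketch below mimics that shape using only CPU and memory requests. Its score follows the "least allocated" idea: prefer the node with the largest share of resources left free. Node names and sizes are hypothetical. The real scheduler also runs many more filter and score plugins, for example for taints, affinity, and topology spread.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int   # millicores
    cpu_requested: int  # millicores already requested by pods on this node
    mem_capacity: int   # MiB
    mem_requested: int  # MiB already requested by pods on this node


def fits(node: Node, cpu: int, mem: int) -> bool:
    """Filter step: the node must have enough unrequested CPU and memory."""
    return (node.cpu_capacity - node.cpu_requested >= cpu
            and node.mem_capacity - node.mem_requested >= mem)


def score(node: Node, cpu: int, mem: int) -> float:
    """Score step: average share of CPU and memory left free after placement (0-100)."""
    cpu_free = (node.cpu_capacity - node.cpu_requested - cpu) / node.cpu_capacity
    mem_free = (node.mem_capacity - node.mem_requested - mem) / node.mem_capacity
    return 100 * (cpu_free + mem_free) / 2


def schedule(cpu: int, mem: int, nodes: List[Node]) -> Optional[str]:
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None  # no node fits: the pod stays Pending
    return max(feasible, key=lambda n: score(n, cpu, mem)).name


nodes = [
    Node("node-a", 4000, 3500, 8192, 2048),
    Node("node-b", 4000, 1000, 8192, 6144),
    Node("node-c", 2000, 500, 4096, 1024),
]
print(schedule(500, 1024, nodes))   # node-c (scores: a=31.25, b=37.5, c=50.0)
print(schedule(2000, 1024, nodes))  # node-b (the only node with 2000m CPU free)
print(schedule(4000, 1024, nodes))  # None
```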

The worker nodes are where the actual work happens. Each node can run multiple pods, and each pod runs one or more containers. Three processes run on every node to schedule and manage those pods:

Container runtime : The container runtime runs the application containers inside each pod. Examples include Docker (used in the installation steps below) and containerd.

kubelet : The kubelet interacts with both the container runtime and the node. It starts the pods assigned to its node, along with the containers inside them, and reports their status back to the control plane.

kube-proxy : kube-proxy forwards requests sent to a Service on to one of the pods that back that Service. It keeps track of which pods belong to each Service, so a request reaches a suitable pod even when that pod runs on a different worker node.
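Conceptually, kube-proxy keeps a table that maps each Service's virtual address to the pods behind it, and it picks one pod per connection. In IPVS mode the default choice is round-robin; the default iptables mode picks a backend at random. The Python sketch below models only the round-robin variant. The class name and the addresses are hypothetical. The real kube-proxy does not forward packets itself: it programs kernel rules (iptables or IPVS) that do the forwarding.

```python
from itertools import cycle


class RoundRobinProxy:
    """Hypothetical toy proxy: maps a Service address to its pod endpoints."""

    def __init__(self) -> None:
        self._backends = {}

    def sync(self, service_addr: str, endpoints: list) -> None:
        """Update the endpoints for a Service (called when its pods change)."""
        if endpoints:
            self._backends[service_addr] = cycle(list(endpoints))
        else:
            self._backends.pop(service_addr, None)

    def forward(self, service_addr: str) -> str:
        """Pick the pod endpoint that should receive the next connection."""
        if service_addr not in self._backends:
            raise LookupError(f"no endpoints for {service_addr}")
        return next(self._backends[service_addr])


proxy = RoundRobinProxy()
proxy.sync("10.96.0.20:80", ["10.244.1.5:8080", "10.244.2.7:8080"])
print([proxy.forward("10.96.0.20:80") for _ in range(3)])
# ['10.244.1.5:8080', '10.244.2.7:8080', '10.244.1.5:8080']
```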

Kubernetes Installation and Configuration

To install the kubeadm tool, visit here.

Follow the installation steps below, adapting them to your requirements.

Run the following commands on both the master and worker nodes:

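```bash
######### Both Master & Worker Node
sudo apt update -y
sudo apt install docker.io -y

# Start Docker now and on every boot
sudo systemctl start docker
sudo systemctl enable docker

# Add the Kubernetes apt repository signing key and the repository itself
# (note: this legacy apt.kubernetes.io repository has since been deprecated in favor of pkgs.k8s.io)
sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg
echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Install kubeadm, kubectl and kubelet, all pinned to the same version (1.20.0)
sudo apt update -y
sudo apt install kubeadm=1.20.0-00 kubectl=1.20.0-00 kubelet=1.20.0-00 -y
```

Run the following commands on the master node: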

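```bash
# Switch to a root shell, then initialize the control plane
sudo su
kubeadm init

# Give the current user a kubeconfig so kubectl can talk to the cluster
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Install the Weave Net pod network add-on
kubectl apply -f https://github.com/weaveworks/weave/releases/download/v2.8.1/weave-daemonset-k8s.yaml

# Print the command that worker nodes use to join the cluster
kubeadm token create --print-join-command
```

Run the following commands on the worker node: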

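```bash
sudo su

# Reset any previous kubeadm state (kubeadm reset starts with its pre-flight checks)
kubeadm reset

# Paste the join command printed on the master, appending --v=5 for verbose output, e.g.:
# kubeadm join <master-ip>:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash> --v=5
```

Run the following command on the master node to check that the worker node has joined: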

```bash
kubectl get nodes
```

Note: Create an inbound rule on the master node that allows traffic on the port shown in the join command output (the API server port, 6443 by default).
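The port for that inbound rule comes from the join command, so it can help to extract it programmatically, for example when scripting the firewall or security-group change. The Python helper below is a hypothetical sketch. It assumes the usual `kubeadm join <host>:<port> --token ... --discovery-token-ca-cert-hash ...` shape printed by `kubeadm token create --print-join-command`. The token and hash in the example are placeholders.

```python
import shlex
from typing import Tuple


def join_endpoint(join_command: str) -> Tuple[str, int]:
    """Return (host, port) of the API server endpoint in a 'kubeadm join' command."""
    args = shlex.split(join_command)
    # 'join' must be followed by the endpoint, so it cannot be the last argument
    if "join" not in args[:-1]:
        raise ValueError("not a kubeadm join command")
    # The argument right after 'join' is the control-plane endpoint, host:port
    endpoint = args[args.index("join") + 1]
    host, sep, port = endpoint.rpartition(":")
    if not sep:
        raise ValueError(f"no port in endpoint {endpoint!r}")
    return host, int(port)


cmd = ("kubeadm join 172.31.5.10:6443 --token abcdef.0123456789abcdef "
       "--discovery-token-ca-cert-hash sha256:<hash> --v=5")
print(join_endpoint(cmd))  # ('172.31.5.10', 6443)
```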

Thank you ... Happy Learning

#KubeWeek challenge #devops #trainwithshubham